INSIGHTLLM: Intelligent System for Integrating Global Human & Animal Health Technology

Team Members

Jian Tao (Group Leader)

Assistant Professor in Visualization; Assistant Director, Texas A&M Institute of Data Science; Affiliated Faculty in the Department of Electrical & Computer Engineering, the Department of Nuclear Engineering, and the Department of Multidisciplinary Engineering

Dheeraj Mudireddy

Master’s Student, Data Science

Overview

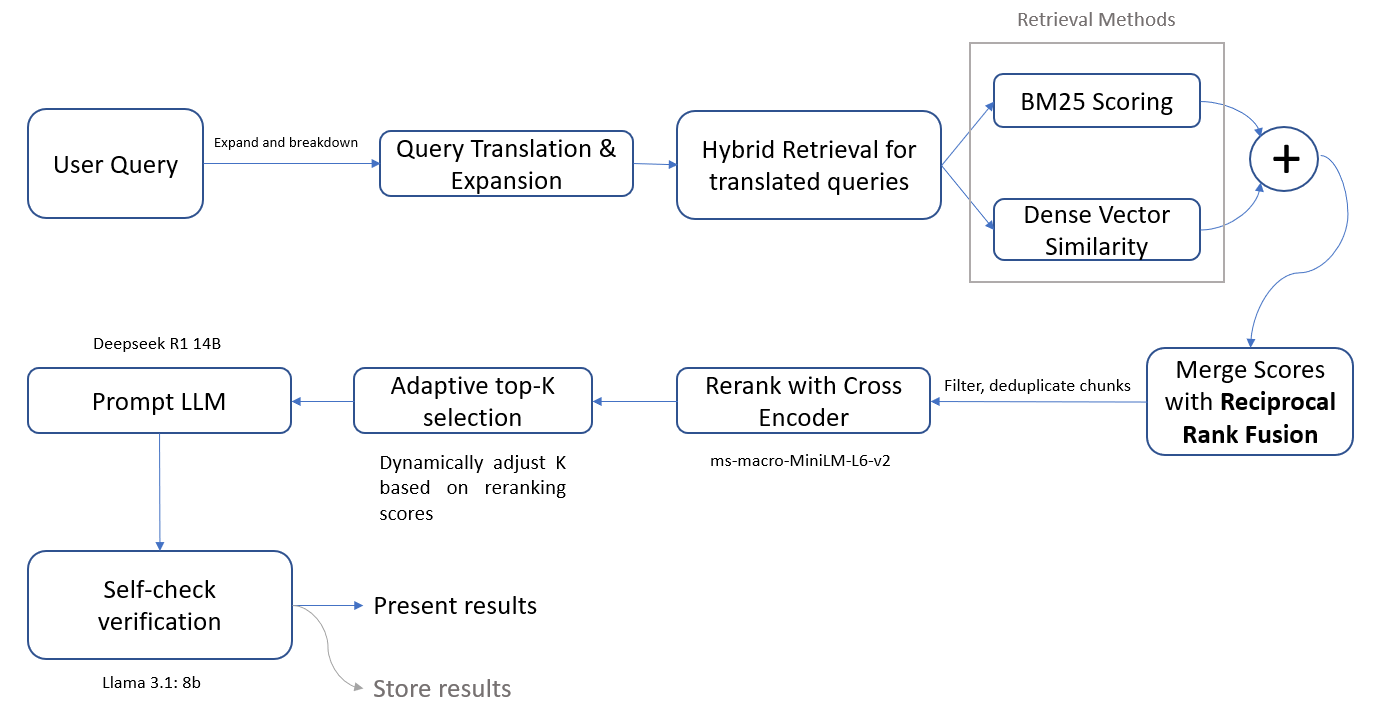

The INSIGHTLLM project aims to develop a Retrieval-Augmented Generation (RAG) system that bridges animal science and human nutrition research. By combining recent reasoning language models with several RAG and document-retrieval techniques, the system is intended to deliver precise, cited answers to complex queries that span both domains.

It is designed to give researchers fast, contextualized access to a large corpus of scientific literature and to streamline the discovery of interdisciplinary insights.

Project Objectives

- Bridge the gap between animal science and human nutrition through AI

- Implement a custom RAG architecture tailored for scientific query resolution

- Enable citation-backed answers derived from peer-reviewed literature

- Support interdisciplinary research through smart document retrieval

- Design an intuitive user interface for query-answer interaction

- Facilitate reproducible, scalable deployment via modular components

Key Features

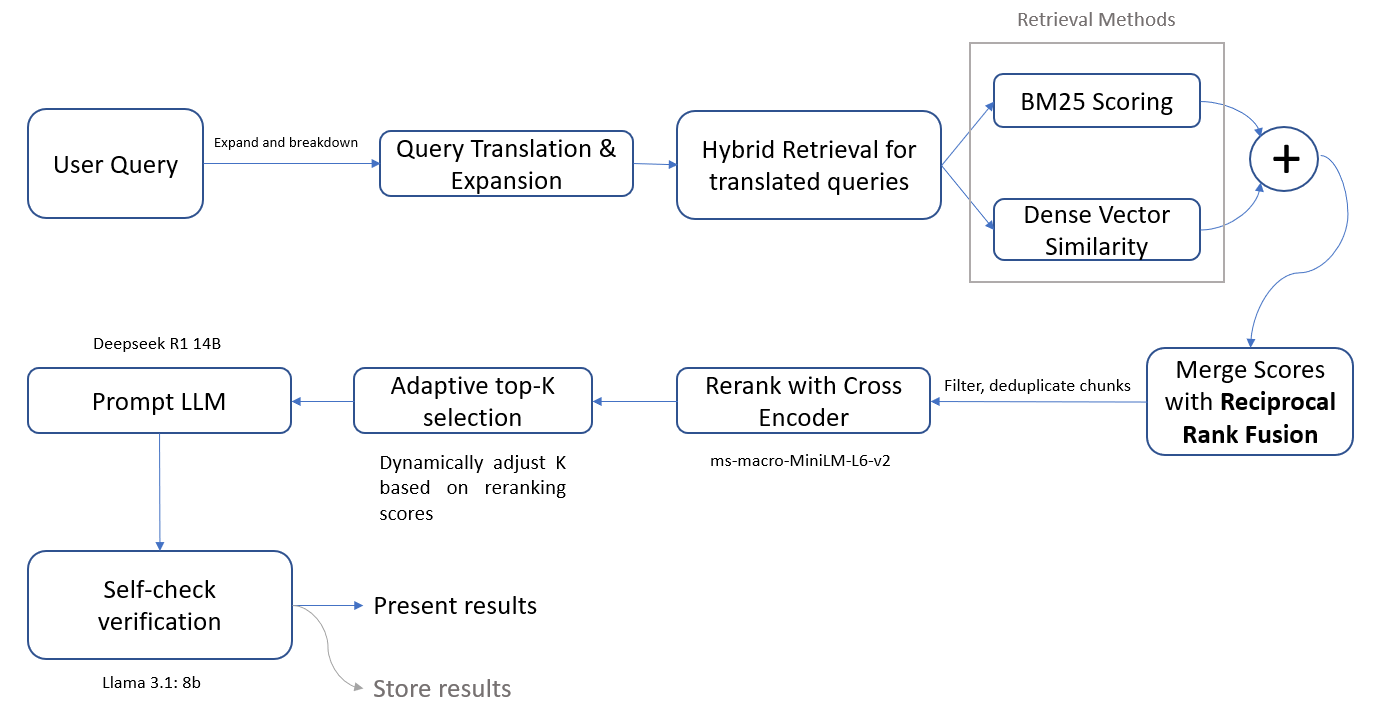

- Query Translation & Expansion: The user's query is rewritten for clarity and decomposed into multiple sub-queries

- Hybrid Retrieval: Combines vector embeddings (dense) with BM25 keyword search (sparse)

- LLM Integration: Answers scientific queries with a locally hosted LLM served through Ollama (e.g., Llama 3.1, DeepSeek-R1)

- Cross-Encoder Reranking: Re-scores retrieved chunks with a MiniLM-L6-v2 cross-encoder to improve ranking accuracy

- Adaptive Top-k Selection: After reranking, the number of documents kept (k) is chosen dynamically from the reranking scores rather than fixed in advance

- Deduplication & Filtering: Removes duplicate and irrelevant chunks so the selected context is clean, diverse, and relevant

- Chain-of-Thought Reasoning: The LLM is prompted to reason step by step before giving its final answer

- Self-Verification (LLM-as-a-Judge): A smaller, lightweight LLM reviews each final answer for consistency and accuracy
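The hybrid retrieval step can be sketched as follows. The project does not say how dense and sparse results are merged, so this sketch assumes reciprocal rank fusion (RRF). The BM25 parameters (`k1=1.5`, `b=0.75`), the RRF constant `k=60`, whitespace tokenization, and all function names are illustrative assumptions, and the embedding vectors are taken as given rather than produced by any particular model.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Assumption: lowercase whitespace tokenization; a real system would use a proper tokenizer.
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of every document for the query (sparse retrieval)."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            denom = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors (dense retrieval)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Fuse ranked lists of doc ids: score(d) = sum over lists of 1 / (k + rank of d)."""
    fused: dict[int, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=fused.get, reverse=True)


def hybrid_search(query: str, query_vec: list[float], docs: list[str],
                  doc_vecs: list[list[float]], top_n: int = 10) -> list[int]:
    """Rank documents separately by BM25 and by cosine similarity, then fuse with RRF."""
    sparse = bm25_scores(query, docs)
    dense = [cosine(query_vec, v) for v in doc_vecs]
    ids = range(len(docs))
    sparse_rank = sorted(ids, key=lambda i: sparse[i], reverse=True)
    dense_rank = sorted(ids, key=lambda i: dense[i], reverse=True)
    return reciprocal_rank_fusion([sparse_rank, dense_rank])[:top_n]
```

RRF uses only ranks, which sidesteps the fact that BM25 scores and cosine similarities live on different scales; a weighted sum of normalized scores is a common alternative.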
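Deduplication and filtering could look like the sketch below, which is one possible approach rather than the project's implementation. It drops chunks shorter than `min_words` and any chunk whose word-trigram Jaccard similarity to an already-kept chunk reaches `threshold`. The shingle size, both thresholds, and the function names are assumptions. Chunks are expected in reranked order, so the higher-ranked copy of a duplicate is the one that survives.

```python
import re


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Set of word n-grams after lowercasing and stripping punctuation."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < n:
        return {tuple(words)}
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def dedupe_chunks(chunks: list[str], threshold: float = 0.8, min_words: int = 5) -> list[str]:
    """Keep chunks in their given (ranked) order, dropping short fragments and near-duplicates."""
    kept: list[str] = []
    kept_shingles: list[set] = []
    for chunk in chunks:
        if len(chunk.split()) < min_words:
            continue  # too short to be useful context
        sh = shingles(chunk)
        if any(jaccard(sh, other) >= threshold for other in kept_shingles):
            continue  # near-duplicate of a higher-ranked chunk
        kept.append(chunk)
        kept_shingles.append(sh)
    return kept
```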
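Query expansion and LLM-as-a-Judge verification both reduce to prompting a local model. The sketch below calls Ollama's REST endpoint (`POST /api/generate` on the default `localhost:11434`) with streaming disabled and reads the `response` field. The prompt wording, the `- ` bullet and `VERDICT:` output formats, the helper names, and the model tags `llama3.1` (expansion) and `llama3.2:1b` (judge) are assumptions for illustration, not the project's actual prompts or models.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint

# Hypothetical prompts; the project's real prompts are not published.
DECOMPOSE_PROMPT = (
    "Rewrite the research question below so it is clear and self-contained, then break it "
    "into at most {n} focused sub-questions, one per line, each starting with '- '.\n\n"
    "Question: {question}"
)
JUDGE_PROMPT = (
    "Check whether the answer is consistent with the context and accurate.\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer: {answer}\n\n"
    "Reply with VERDICT: PASS or VERDICT: FAIL on the first line, then a short reason."
)


def ollama_generate(prompt: str, model: str) -> str:
    """Send one non-streaming generation request to a local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(OLLAMA_URL, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


def parse_sub_queries(text: str, n: int = 4) -> list[str]:
    """Extract up to n lines that start with '- ' from the model output."""
    subs = []
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("- "):
            subs.append(stripped[2:].strip())
    return subs[:n]


def parse_verdict(text: str) -> bool:
    """True only if the judge reply contains 'VERDICT: PASS' (case-insensitive)."""
    match = re.search(r"VERDICT:\s*(PASS|FAIL)", text, re.IGNORECASE)
    return bool(match) and match.group(1).upper() == "PASS"


def expand_query(question: str, model: str = "llama3.1", n: int = 4) -> list[str]:
    reply = ollama_generate(DECOMPOSE_PROMPT.format(n=n, question=question), model)
    return parse_sub_queries(reply, n) or [question]  # fall back to the original query


def verify_answer(question: str, answer: str, context: str,
                  judge_model: str = "llama3.2:1b") -> bool:
    prompt = JUDGE_PROMPT.format(context=context, question=question, answer=answer)
    return parse_verdict(ollama_generate(prompt, judge_model))
```

Only the parsing helpers run without a server; `expand_query` and `verify_answer` need a running Ollama instance with the named models pulled. Forcing a fixed output format and parsing it keeps the retrieval and verification code independent of how verbose each model is.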

Important Links

Source Code Repository (WIP - access required)